When I first came across OpenAI’s new Agent Builder, I almost scrolled past it. Honestly, how many times have we seen “AI agent builders” pop up over the past two years? Most of them promise the world and end up being either too simple or too much work for what they’re worth. So my first reaction was, nah, I’ve seen this movie before.

But curiosity got the better of me, and I’m actually glad I gave it a shot. After a couple of days of tinkering, I realized this isn’t just another chatbot tool. It feels more like an AI workshop, where you don’t just write prompts but actually design how an agent thinks, remembers, and connects with your tools. It’s part of a bigger bundle called AgentKit, which also includes things like ChatKit, Evals, and the Connector Registry.

So, instead of being a toy to play with, it feels like OpenAI is trying to hand us a proper toolbox.

First Impressions

Opening it for the first time, I won’t lie — it was a bit overwhelming. There are boxes, connectors, menus… it looked like some sort of mind map software but for AI. I randomly dragged things around just to see what would happen, and more than once I broke the setup without knowing why. For about half an hour, I was convinced I’d need a tutorial video just to survive.

But after poking around, things started to make sense. You can literally drag a block that says “Prompt,” link it to another block that says “API call,” add a condition, and then connect it to an “Output.” The whole logic is right there on the canvas. Once I saw it working, I had this little “aha” moment. No more scrolling through 500 lines of code wondering where I missed a comma.

It reminded me of sketching an idea on paper — except here, the sketch actually runs.
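To make the canvas idea concrete, here is a rough Python sketch of the same Prompt, API call, condition, and Output chain. This is not Agent Builder's actual API or export format. Every function name, the `NODES` table, and the fake order data are made up for illustration. Each node does its work and then names the next node to run.

```python
# Hypothetical sketch of a node-based workflow; not Agent Builder's real API.
from typing import Callable, Dict, Optional

State = Dict[str, object]


def build_prompt(state: State) -> Optional[str]:
    # "Prompt" block: prepare the instruction for the next step.
    state["prompt"] = f"Find the status of order {state['order_id']}."
    return "api_call"


def api_call(state: State) -> Optional[str]:
    # "API call" block: a stand-in lookup instead of a real HTTP request.
    fake_orders = {"A100": "shipped", "B200": "delayed"}
    state["status"] = fake_orders.get(str(state["order_id"]))
    return "check_status"


def check_status(state: State) -> Optional[str]:
    # Condition block: pick a branch based on what the lookup returned.
    return "output_found" if state["status"] else "output_missing"


def output_found(state: State) -> Optional[str]:
    state["reply"] = f"Order {state['order_id']} is {state['status']}."
    return None  # None means the workflow ends here


def output_missing(state: State) -> Optional[str]:
    state["reply"] = f"I could not find order {state['order_id']}."
    return None


NODES: Dict[str, Callable[[State], Optional[str]]] = {
    "prompt": build_prompt,
    "api_call": api_call,
    "check_status": check_status,
    "output_found": output_found,
    "output_missing": output_missing,
}


def run_workflow(order_id: str, start: str = "prompt") -> str:
    # Walk the graph from the start node until a node returns None.
    state: State = {"order_id": order_id}
    current: Optional[str] = start
    while current is not None:
        current = NODES[current](state)
    return str(state["reply"])


if __name__ == "__main__":
    print(run_workflow("A100"))
    print(run_workflow("Z999"))
```

Written as code, the branching is buried in return values. On the canvas, the same branch is just two arrows leaving one box, which is why the visual version is easier to follow.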

What Makes OpenAI Agent Builder (AgentKit) Different

The first thing that surprised me is how much safety comes built in. If you’ve ever tried building bots, you know the pain: sanitizing inputs, redacting sensitive info, adding filters so the AI doesn’t say something awkward. With Agent Builder, a lot of that happens in the background. I threw a few messy inputs at it and it quietly handled them without me writing a single line of “extra safety” code.
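For a sense of the chore this replaces, here is a minimal sketch of the hand-rolled redaction step you would normally write yourself. It is not how Agent Builder implements its safety features. The patterns, the `redact` function name, and the placeholder tags are all my own assumptions, and real PII detection needs far more than two regexes.

```python
# Hypothetical hand-written redaction step; deliberately simplistic.
import re

# Rough email matcher: local part, "@", then a dotted domain.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Rough card matcher: 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    # Replace anything that looks like an email or card number with a tag
    # before the text is passed on to a model or written to a log.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)
    return text


if __name__ == "__main__":
    print(redact("Mail me at jo@example.com, card 4111 1111 1111 1111."))
```

Multiply that by every kind of sensitive data you care about and it is easy to see why having it handled for you saves time.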

The other part I didn’t expect was memory. I set up a fake support bot to test it out, gave it a “ticket number,” and kept chatting with it. Ten messages later, it still remembered the ticket. Most simple builders would’ve forgotten halfway through, and you’d have to repeat yourself. This one didn’t — and that felt like a big step forward.
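Here is a small sketch of what "remembering the ticket" amounts to when you build it by hand: per-conversation state that outlives a single message. The `Session` class, the regex, and the canned replies are hypothetical, and none of this reflects how Agent Builder stores memory internally.

```python
# Hypothetical per-conversation memory; not Agent Builder's implementation.
import re
from dataclasses import dataclass, field
from typing import Dict, List

# Matches phrases like "ticket 4821", "ticket #4821" or "case 4821".
TICKET_RE = re.compile(r"\b(?:ticket|case)\s*#?\s*(\d+)\b", re.IGNORECASE)


@dataclass
class Session:
    facts: Dict[str, str] = field(default_factory=dict)  # long-lived details
    history: List[str] = field(default_factory=list)     # raw message log

    def handle(self, message: str) -> str:
        self.history.append(message)
        match = TICKET_RE.search(message)
        if match:
            # Store the ticket number so later turns can refer back to it.
            self.facts["ticket"] = match.group(1)
            return f"Got it, ticket {self.facts['ticket']}."
        if "ticket" in self.facts:
            return f"Still working on ticket {self.facts['ticket']}."
        return "Which ticket is this about?"


if __name__ == "__main__":
    session = Session()
    print(session.handle("Hi, my ticket #4821 is stuck"))
    for _ in range(9):
        session.handle("Some unrelated follow-up")
    print(session.handle("Any update?"))
```

The point of the test above is that I did not have to wire up anything like this myself.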

Debugging is also less painful here. Instead of redeploying every tiny tweak, you can just hit test, see what broke, and fix it instantly. It makes experimenting a lot less frustrating.

Playing with Use Cases

I tried a few mini-projects to see where it would shine.

- Customer Support: This is the obvious one. FAQs, basic troubleshooting, order tracking — it can handle that fine. When things get too complicated, you can route the conversation to a human.

- Internal Team Bot: This one I really liked. I set up a tiny agent that could answer “Where’s the latest version of the pricing sheet?” and it actually fetched the doc. For teams drowning in files, this is gold.

- Onboarding Assistant: Imagine signing up for a service and being greeted by a bot that doesn’t just spit out links but actually walks you through setup and even pings you reminders later. That’s doable here.

The best part is that you don’t have to start from scratch every time. There are templates for these scenarios. I picked the support bot template, swapped in my data, and within an hour I had something that felt usable.

Pricing & Availability

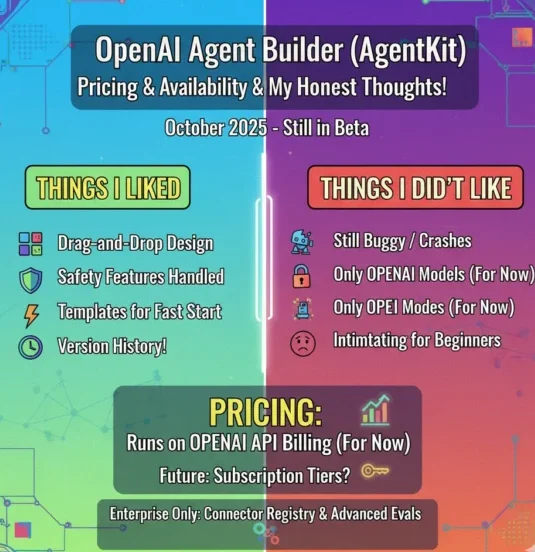

As of October 2025, it’s still in beta. Some features, like the Connector Registry and the advanced evaluation tools, are only for enterprise accounts. Right now, there isn’t separate pricing — it runs on the regular OpenAI API billing.

But if I had to guess, OpenAI will roll out proper subscription tiers once it’s ready for the public. Probably something that scales based on the number of agents you run or how many requests you send per month.

Things I Liked (and Didn’t)

What I liked most:

- The drag-and-drop design feels natural after you get past the initial confusion.

- Safety features are handled in the background, which saves a lot of time.

- Templates make it faster to get started.

- It has version history, so you’re not terrified of breaking everything.

What I didn’t like:

- It’s still buggy — I had a few crashes that forced me to refresh.

- You can only use OpenAI models (for now).

- Total beginners might find it intimidating at first.

Is It Like Zapier?

A lot of people are comparing this to Zapier or Make, but honestly, it’s not the same thing. Zapier is great at “if this happens, then do that.” It’s automation. Agent Builder feels closer to reasoning. It doesn’t just react — it processes context, decides, and then responds.

If Zapier is like a checklist app, Agent Builder is like the intern who reads the checklist, asks follow-up questions, and even suggests a better way to do the task. Different leagues.

Final Takeaway

So, after a few days of experimenting, would I recommend keeping an eye on Agent Builder? Absolutely.

It’s not perfect. The beta bugs are real, and you’ll probably get frustrated a few times before it clicks. But once it does, the potential is obvious: the visual workflow speeds up prototyping, the built-in safety features reduce headaches, and because it sits inside OpenAI’s broader ecosystem, the pieces connect without much extra work.

For now, I’d call it a promising work in progress. If you’re just curious, you might want to wait for the polished version. But if you’re serious about building useful AI agents, even at this stage, it’s worth diving into.

What excites me most is that it doesn’t feel like just another AI demo. It feels like the start of a proper toolkit — the kind you can build real tools and products on. And that, to me, is a big deal.
